Weak supervision

You've set up some great heuristics and are now wondering how well they work together? Easy: you can now apply weak supervision to combine them into automated, denoised labels.

What is weak supervision?

Weak supervision is a technique that combines multiple heuristics into a single, automated label. What makes it powerful is that you don't need to label all of your training data yourself, only a part of it. The heuristics you've already set up then create labels for the rest of your training data, which gives you a training set much larger than one you could have labeled by hand. The key idea is that labeling functions don't need to be perfectly accurate; they can be noisy. Combining several of them yields a single, denoised label.
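To make the idea of combining noisy heuristics more concrete, here is a minimal sketch in Python. It assumes a simple precision-weighted vote: each heuristic's precision is estimated on the manually labeled part of the data, and its votes on other records are weighted accordingly. This is an illustration only and not necessarily the aggregation refinery runs internally; the heuristic names, labels, and helper functions are hypothetical.

```python
from collections import defaultdict

ABSTAIN = None  # a heuristic may decline to vote on a record


def estimate_precision(votes, gold):
    """Share of a heuristic's non-abstaining votes that match the manual labels."""
    hits = total = 0
    for vote, label in zip(votes, gold):
        if vote is ABSTAIN:
            continue
        total += 1
        hits += vote == label
    return hits / total if total else 0.0


def weak_supervise(record_votes, precisions):
    """Combine one record's votes into (label, confidence) via a precision-weighted vote."""
    scores = defaultdict(float)
    for name, vote in record_votes.items():
        if vote is not ABSTAIN:
            scores[vote] += precisions[name]
    total = sum(scores.values())
    if total == 0:
        return ABSTAIN, 0.0  # nobody voted, or only heuristics with zero precision did
    label = max(scores, key=scores.get)
    return label, scores[label] / total


# Hypothetical heuristics voting on three manually labeled records
gold = ["spam", "ham", "spam"]
labeled_votes = {
    "keyword_lookup": ["spam", "spam", "spam"],  # 2 of 3 votes correct
    "sender_check": ["spam", "ham", ABSTAIN],    # 2 of 2 votes correct
}
precisions = {
    name: estimate_precision(votes, gold) for name, votes in labeled_votes.items()
}

# The heuristics disagree on a new record; the more precise one wins
label, confidence = weak_supervise(
    {"keyword_lookup": "spam", "sender_check": "ham"}, precisions
)
print(label, round(confidence, 2))
```

In this sketch, a disagreement lowers the confidence of the resulting label rather than discarding the record, which is what allows noisy heuristics to still contribute useful signal.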

Use in refinery

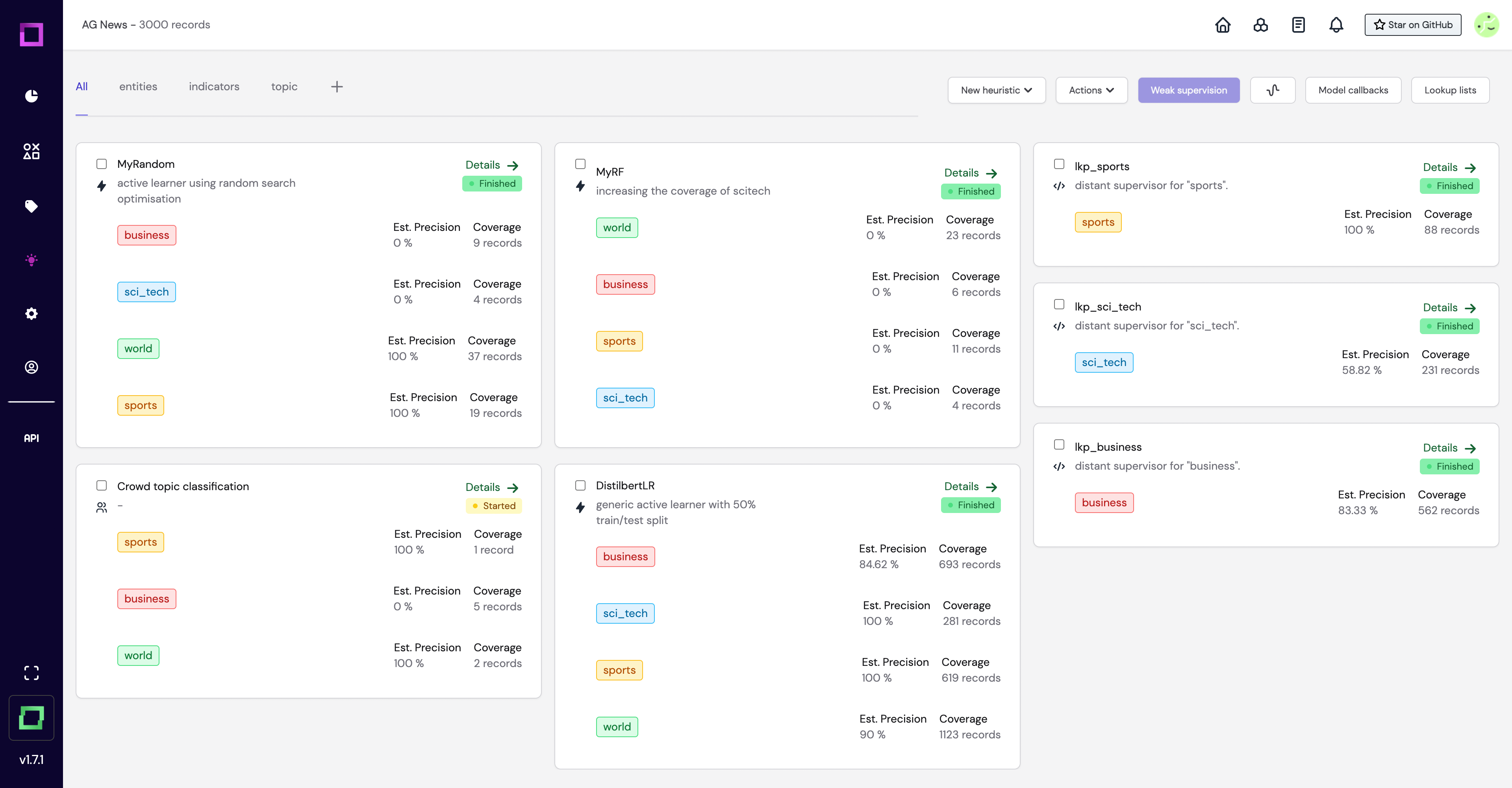

Let us go through an example to see how weak supervision works in refinery. We will use the same example as in the heuristics section. Start by navigating to the heuristics page, and make sure you have already created some heuristics. If you haven't, you can create some by following the heuristics section.

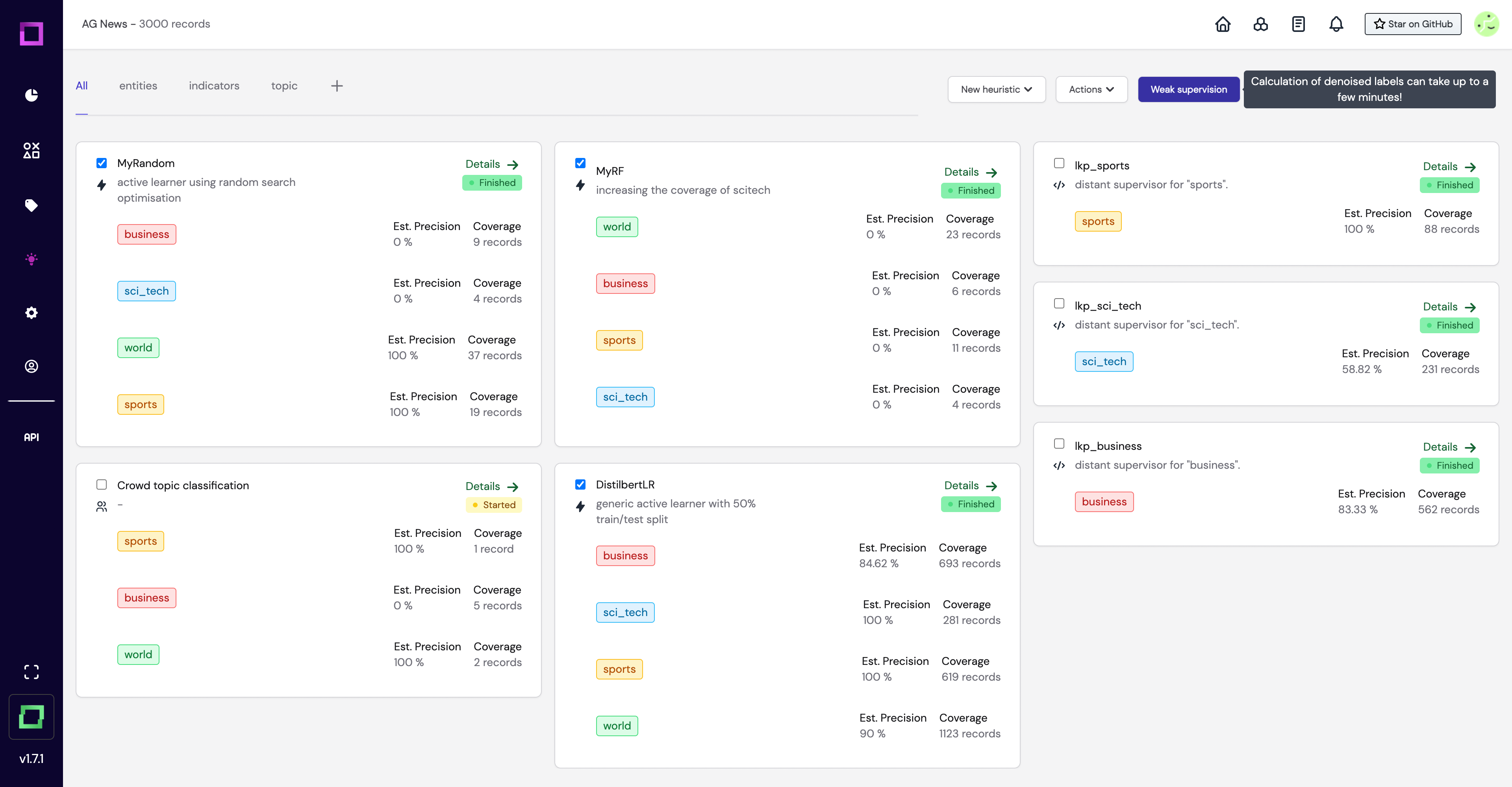

Now select the heuristics you want to combine by ticking their checkboxes. Once you've done that, press the "Weak supervision" button to start the weak supervision process.

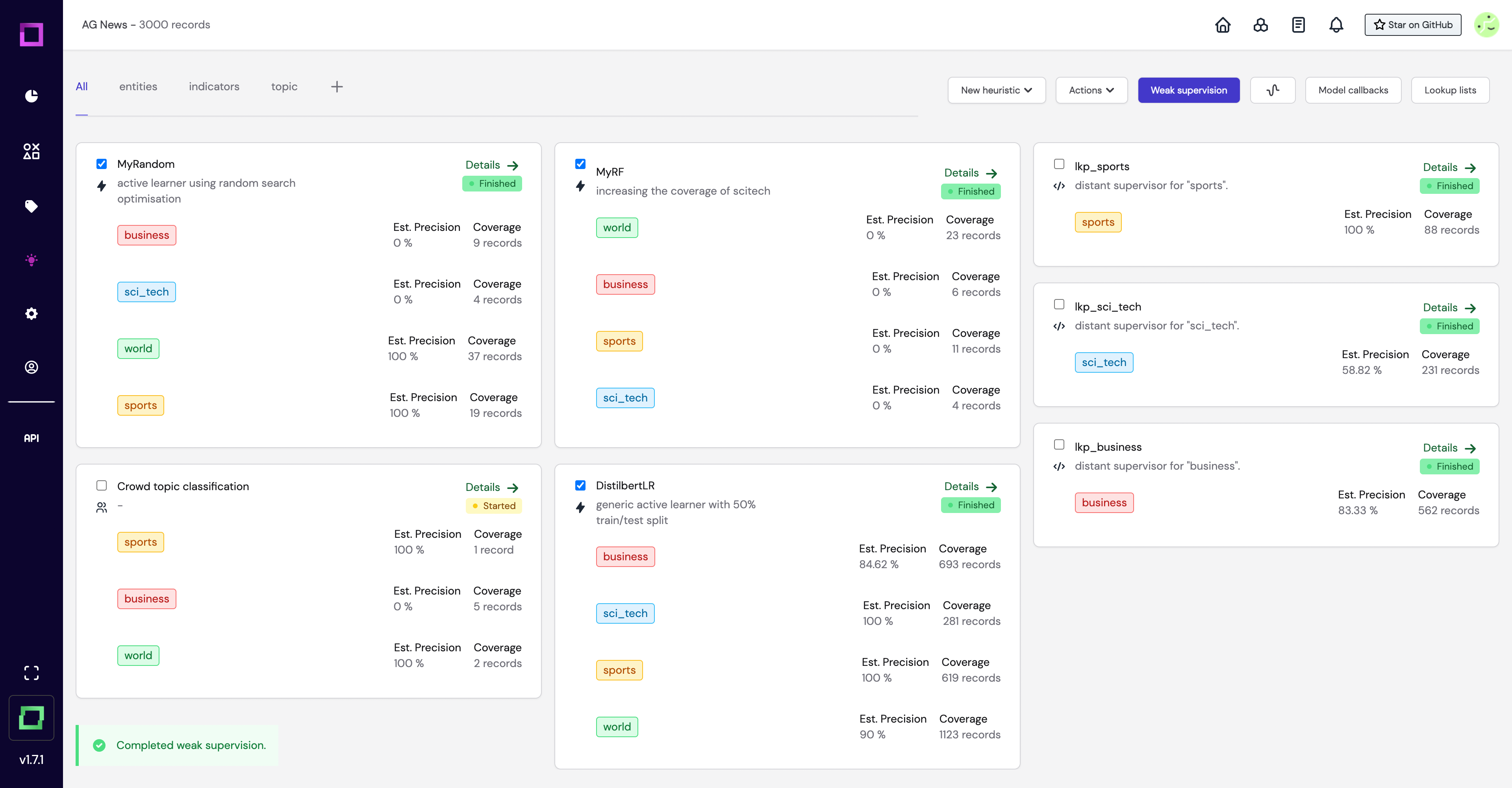

That's it! Computing the labels usually takes only a few seconds. Once the process has finished, a notification at the bottom left of the page tells you whether it completed successfully or ran into an error.

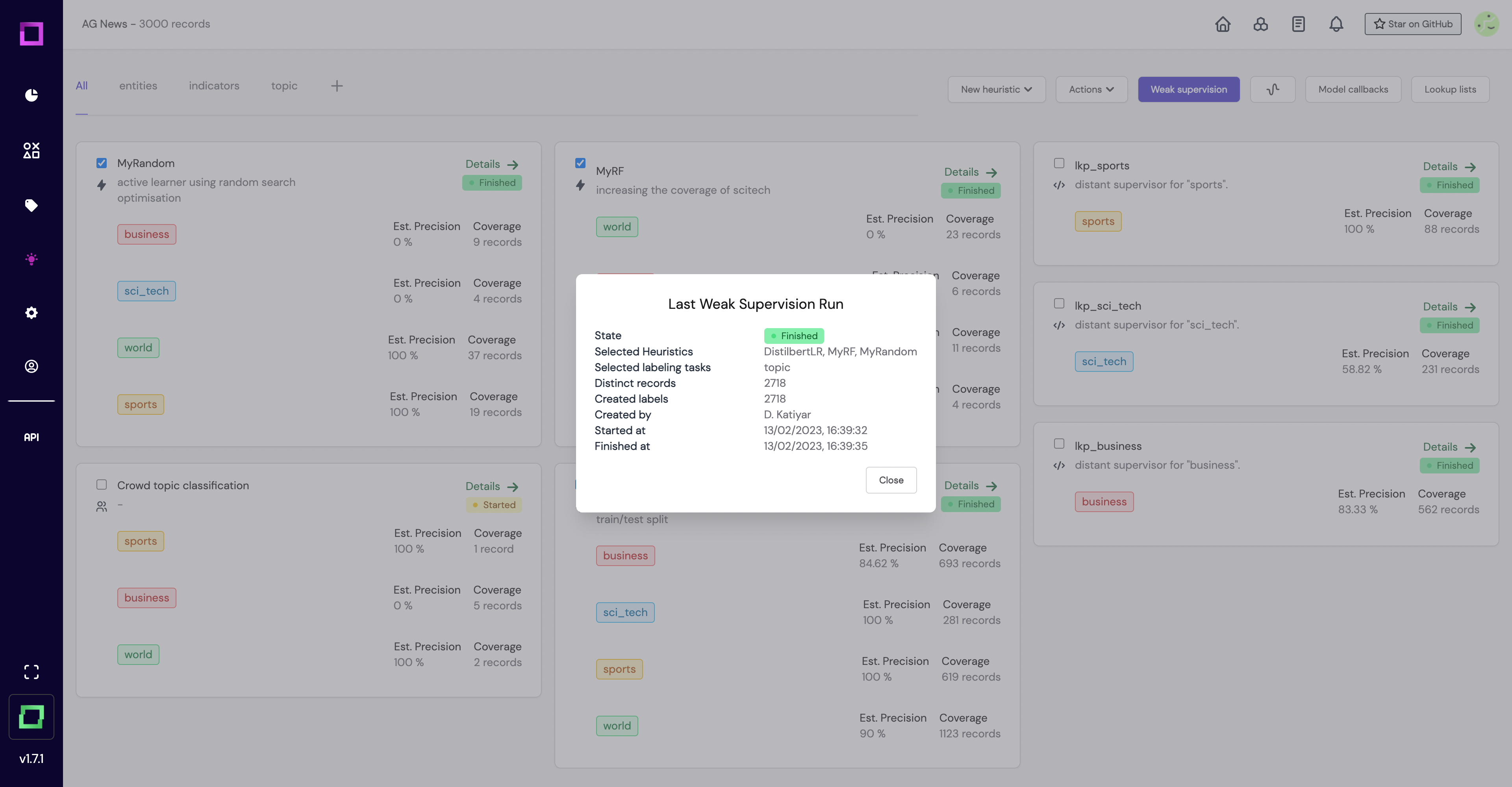

The button to the right of the "Weak supervision" button summarizes the last weak supervision run you've executed. If you want more details, press that button to open a modal.

Now that your remaining data has been labeled, you can get an overview of how well the weak supervision performed by looking at the label and confidence distributions and the confusion matrix.
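For intuition about what a label distribution and a confusion matrix summarize, the following is a small Python sketch. It assumes you have records that carry both a manual label and a weakly supervised label; the data and function names are hypothetical and this is not how refinery computes its charts.

```python
from collections import Counter


def label_distribution(labels):
    """Relative frequency of each label."""
    if not labels:
        return {}
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


def confusion_matrix(manual, weak):
    """Count (manual label, weakly supervised label) pairs."""
    return Counter(zip(manual, weak))


# Hypothetical records that have both kinds of labels
manual = ["spam", "ham", "spam", "ham"]
weak = ["spam", "ham", "ham", "ham"]

distribution = label_distribution(weak)
matrix = confusion_matrix(manual, weak)

print(distribution)
for (manual_label, weak_label), count in sorted(matrix.items()):
    print(f"manual={manual_label} weak={weak_label}: {count}")
```

Pairs where the two labels differ are the off-diagonal cells of the confusion matrix, and they point to classes where the selected heuristics tend to disagree with your manual labels.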

Let's jump directly into the monitoring to see the quality of our weakly supervised labels.