Model callbacks

Model callbacks are currently only available for classification models while we test the feature. If it is widely requested, we'll add a callback for extraction models.

Model callbacks allow you to feed the predictions of trained models, together with their confidences, into refinery, where you can use them for filtering and data slice creation. This makes them another useful tool for quality control: you can create validation slices containing the records where the production model disagrees with the manual or weakly supervised label.
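To make "prediction and confidence" concrete, here is a minimal sketch in plain Python. It does not use the refinery SDK; it only assumes that a classifier returns one probability per label. The function name `to_prediction` and the label names are hypothetical and chosen for illustration.

```python
from typing import Dict, Tuple


def to_prediction(probabilities: Dict[str, float]) -> Tuple[str, float]:
    """Return the most likely label and its probability as the confidence."""
    if not probabilities:
        raise ValueError("probabilities must not be empty")
    # The predicted label is the one with the highest probability.
    label = max(probabilities, key=probabilities.get)
    return label, probabilities[label]


# Hypothetical model output for a single record (assumed values).
label, confidence = to_prediction({"positive": 0.91, "negative": 0.09})
print(label, confidence)
```

A pair like this, one per record, is the kind of information a model callback carries into refinery.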

For exact usage and examples, see the relevant section of the refinery SDK README on GitHub. Wrappers are already available for several frameworks, e.g. HuggingFace, PyTorch, and Sklearn.

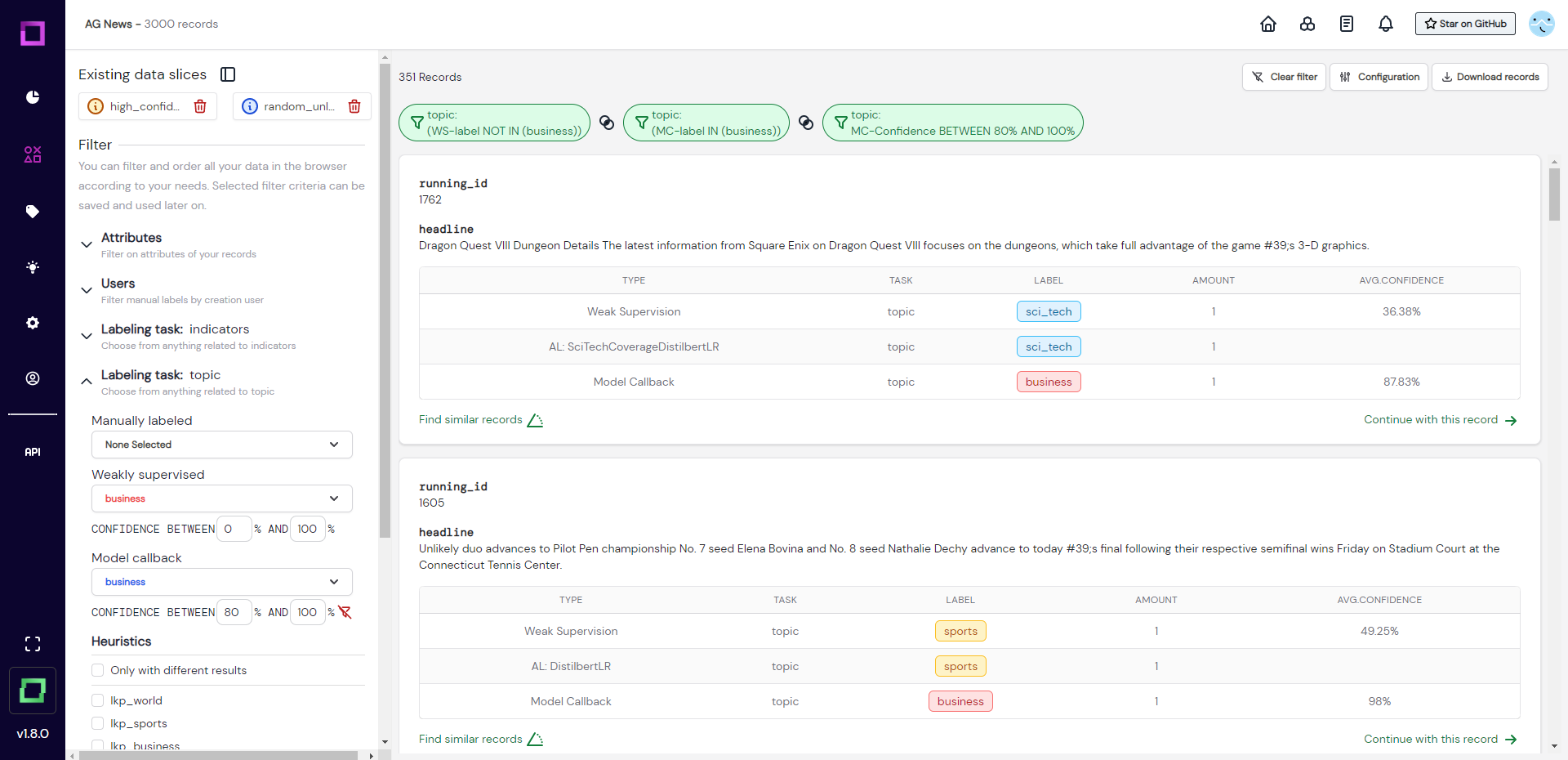

Once you have uploaded your predictions to refinery, you can access the model callbacks on the heuristics page (see Fig. 1).

Fig. 2 shows how you can combine callbacks with manual labels to find incorrect model predictions made with high confidence. This filter setting works both ways: the same disagreements can also point to labeling mistakes.
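The logic behind such a filter can be sketched outside of refinery in a few lines of Python. This is an illustration of the idea, not refinery's implementation: the `Record` class, the `confident_disagreements` function, and the threshold of 0.8 are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Record:
    # Hypothetical record layout, not refinery's data model.
    text: str
    manual_label: Optional[str]  # None if the record is not labeled yet
    model_label: str             # prediction delivered by the model callback
    confidence: float            # model confidence between 0 and 1


def confident_disagreements(
    records: List[Record], min_confidence: float = 0.8
) -> List[Record]:
    """Keep manually labeled records where a confident model disagrees."""
    hits = [
        r
        for r in records
        if r.manual_label is not None
        and r.manual_label != r.model_label
        and r.confidence >= min_confidence
    ]
    # Most confident disagreements first: these deserve review soonest.
    return sorted(hits, key=lambda r: r.confidence, reverse=True)


# Made-up example data.
records = [
    Record("Win a free prize now", "ham", "spam", 0.95),
    Record("Meeting at 10am", "ham", "ham", 0.99),
    Record("Cheap loans available", None, "spam", 0.97),
    Record("See attached invoice", "spam", "ham", 0.60),
]

for r in confident_disagreements(records):
    print(r.text, r.manual_label, r.model_label, r.confidence)
```

Each record returned this way is either a model error or a labeling error, which is why the same slice serves both purposes.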